The spec sheet said 18 hours of battery. We got eleven.

Every review on this site includes a Spec Gap measurement — the distance between what the manufacturer claimed and what we actually recorded across a minimum of six weeks of real-world use. When the gap is zero, we say so. When it isn’t, we publish the number.

How this site operates differently

Four things that are structural to how Deep In Spec works — not positioning language, but process decisions with observable consequences.

The measured distance between the claim and the reality

Every product we publish carries a Spec Gap report — a direct comparison between manufacturer specifications and our recorded measurements. The noise-cancellation headphones rated at -35dB that we measured at -22dB. The router advertised at 1.2Gbps throughput that delivered 680Mbps under real household load. We don’t soften the gap. We publish the number.
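As a rough illustration of the arithmetic behind a Spec Gap figure, the sketch below assumes the gap is reported as the shortfall relative to the manufacturer's claim. The function name `spec_gap` and the example labels are hypothetical and do not describe the site's actual tooling. The input values are the claimed and measured figures quoted on this page.

```python
def spec_gap(claimed: float, measured: float) -> tuple[float, float]:
    """Return (absolute shortfall, shortfall as a percentage of the claim).

    Assumes a linear quantity where higher is better (hours, Mbps, MB/s).
    """
    if claimed <= 0:
        raise ValueError("claimed value must be positive")
    shortfall = claimed - measured
    return shortfall, 100.0 * shortfall / claimed


# Claimed vs. measured pairs taken from figures quoted on this page.
examples = {
    "battery, hours": (18.0, 11.0),
    "router throughput, Mbps": (1200.0, 680.0),
    "ultrabook battery, hours": (14.0, 9.2),
}

for label, (claimed, measured) in examples.items():
    shortfall, pct = spec_gap(claimed, measured)
    print(f"{label}: {shortfall:.1f} short, {pct:.1f}% below claim")
```

Decibel ratings are logarithmic, so a percentage is misleading for them. For the headphones rated at -35dB and measured at -22dB, the gap is better stated as the plain difference of 13 dB.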

Six weeks across the actual situations people use this in

A laptop review written after three days of testing tells you what the product wants you to think. A laptop put through an eight-hour workday, a sustained video encoding session, a two-hour video call on battery, and a month of daily carry tells you whether thermal throttling kicks in at hour four and whether the keyboard develops a rattle before the warranty expires.

“Great for most users” names nobody and helps nobody

A remote designer evaluating a monitor for color-accurate work at 10 hours a day needs different information than a graduate student buying their first IPS display. Deep In Spec frames every verdict around a specific buyer type — and states clearly when a product is the right answer for one situation and the wrong answer for another.

One in four products we bought never became a review

Twenty-five percent of what we’ve purchased and tested doesn’t appear on this site. Not because we ran out of time, but because the products didn’t meet the threshold to recommend. What fails testing stays unpublished. Six previously recommended products have since been revisited and removed after long-term testing changed the verdict — and those updates are published openly.

Where we spend our testing budget

Laptops & Portable Computing

31 reviews across budget, mid-range, and premium tiers. Most recent Spec Gap finding: battery life 34% below rated spec on a widely reviewed ultrabook.

Monitors & Displays

14 panels tested. Spec Gap tracked: panel brightness, color accuracy (delta-E), and rated vs. measured refresh rate at native resolution.

Headphones & Earbuds

ANC attenuation, rated vs. measured, in three noise environments. A 13dB gap on one flagship model made the review uncomfortable to write.

Mechanical Keyboards

Switch polling rate and actuation consistency tested with a hardware logger, not taken from manufacturer figures.

Networking & Routers

Throughput measured at 1m, 5m, and 12m. Max-spec headline numbers rarely survive the hallway.

Storage & SSDs

Sustained write tested after cache saturation — the only number that matters in a real transfer job.

Running macOS and Windows 10 on the Same Computer

Cursus iaculis etiam in In nullam donec sem sed consequat scelerisque nibh amet, massa egestas…

Apple opens another megastore in China amid William Barr criticism

Cursus iaculis etiam in In nullam donec sem sed consequat scelerisque nibh amet, massa egestas…

The ‘Sounds’ of Space as NASA’s Cassini Dives by Saturn

Cursus iaculis etiam in In nullam donec sem sed consequat scelerisque nibh amet, massa egestas…

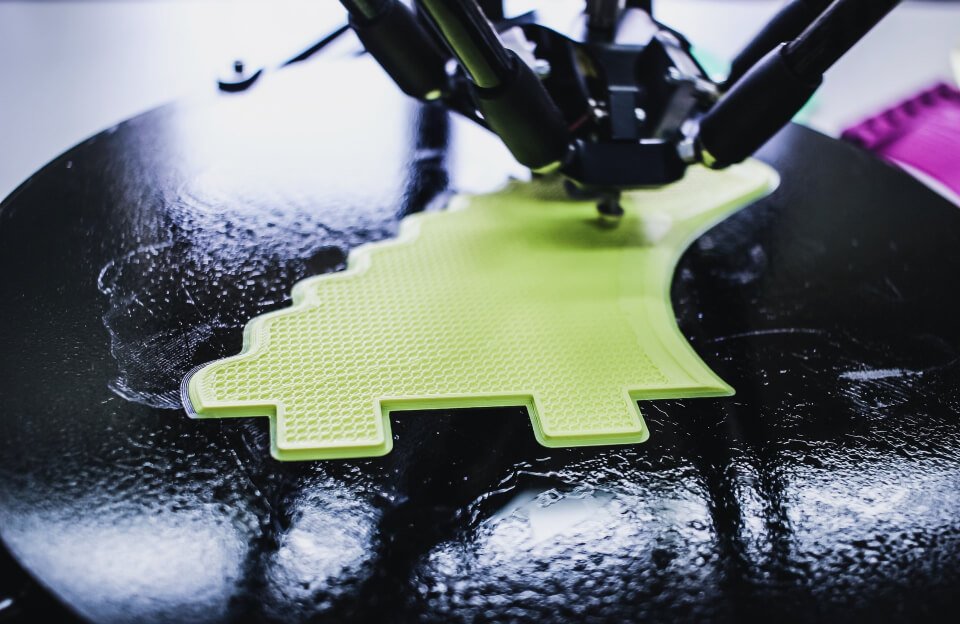

Broke a Glass? Someday You Might 3-D-Print a New One

Cursus iaculis etiam in In nullam donec sem sed consequat scelerisque nibh amet, massa egestas…

This Is a Giant Shipworm. You May Wish It Had Stayed In Its Tube.

Cursus iaculis etiam in In nullam donec sem sed consequat scelerisque nibh amet, massa egestas…

For Families of Teens at Microsoft Surface

Cursus iaculis etiam in In nullam donec sem sed consequat scelerisque nibh amet, massa egestas…

Purchased, tested, and evaluated across 10 product categories since launch — spanning budget options under $40 to professional hardware over $1,200. The number that matters more is the one below it.

Failed testing threshold. No review, no recommendation, no disclosure of time spent.

Not the average. The floor below which no verdict gets written.

Previously recommended products that long-term testing changed. Revisions published openly.

From people who read spec sheets before buying

Ratings vary because purchasing situations vary. A 4.3 from someone who found the right product for their specific use case is more useful than a uniform 5.0 from a page that needed social proof.

The Spec Gap section on the ultrabook review is the only reason I didn’t buy the wrong laptop twice in a row. The manufacturer claimed 14h battery on their spec page. Deep In Spec recorded 9.2h under a workload that’s basically my actual day — browser tabs, Figma, a video call. That gap matters when you’re deciding whether to carry the charger. It’s the kind of number that changes the purchase, not just the review score.

I’m not someone who reads spec sheets — I was buying headphones for my daughter who is. She would have found every flaw in whatever I picked. The review here actually told me which caveats mattered for how she uses them: the ear cup seal issue at higher clamping force, and the fact that the ANC worked well for office noise but not on transit. She’s used them daily for four months. No complaints, which is the highest praise I can give.

I edit video. The advertised 3500 MB/s sequential read on the NVMe I was looking at is the cache-burst number. The review here tested sustained write after cache saturation and got 1,180 MB/s — still fast, but a completely different conversation for exporting a 90-minute timeline. That’s the number I needed and couldn’t find anywhere else.

Buy it once. The right one.

Most electronics regret comes from buying on specs that weren’t tested. Every review here includes a direct comparison between what the product claims and what we measured — because the gap between those two numbers is usually the most important number in the review.

Why trust this over the review sites you already read? Our 25% rejection rate means the products you see here cleared a threshold. The ones that didn’t never appeared. That’s a different editorial model than publishing everything with a score attached.